Life Is Messy

Demo queries have this nasty habit of being clean. Even using a pit of despair like Adventure Works or World Wide Importers, it’s easy to craft demo queries that fit the scenario you need to make yourself look like a genius. Stack Overflow, in all its simplicity, makes this even easier (lucky me!) because there’s nothing all that woogy or wonky to dance around.

While working with a client recently — yes, Brent lets me talk to paying customers — we found a rather tough situation. They were using Windowing functions over one group of columns to partition and order by, but the where clause was touching a totally different group of columns.

The query plan wasn’t happy.

Users weren’t happy.

I was still dizzy from being on a boat.

Optimal?

If you’ve been reading the blog for a while, you may remember this post from about two years ago. Over there, we talked about a POC index, a term popularized by Itzik Ben-Gan.

But how does that work when your query has other needs?

Let’s meet our query!

|

1 2 3 4 5 6 7 8 |

DECLARE @Score INT, @rn INT SELECT @Score = p.Score, @rn = ROW_NUMBER() OVER ( PARTITION BY p.OwnerUserId ORDER BY p.Score DESC, p.CreationDate DESC ) FROM dbo.Posts AS p WHERE p.PostTypeId = 1 AND p.CommunityOwnedDate IS NULL AND p.LastActivityDate >= '2016-01-01' |

We have a Windowing function that partitions and orders by three columns, and a where clause that uses three other columns. If we stick a POC index on the Posts table that prioritizes performance of the Windowing function, what happens? I’m going to put the three where clause columns in the include list to avoid troubleshooting key lookups later.

|

1 |

CREATE UNIQUE NONCLUSTERED INDEX ix_helper ON dbo.Posts (OwnerUserId, Score DESC, CreationDate DESC, Id) INCLUDE (PostTypeId, CommunityOwnedDate, LastActivityDate) |

Now when I run the query, here’s my plan with — you guessed it! A missing index request.

The missing index request is for nearly the EXACT OPPOSITE INDEX we just added. Oh boy.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

/* Missing Index Details from SQLQuery12.sql - NADAULTRA\SQL2016E.StackOverflow (sa (67)) The Query Processor estimates that implementing the following index could improve the query cost by 96.8793%. */ /* USE [StackOverflow] GO CREATE NONCLUSTERED INDEX [<Name of Missing Index, sysname,>] ON [dbo].[Posts] ([CommunityOwnedDate],[PostTypeId],[LastActivityDate]) INCLUDE ([CreationDate],[OwnerUserId],[Score]) GO */ |

96.8%! I must be a bad DBA. I made a backwards index. I hope someone automates this soon.

Okay, so, let’s create an index close in spirit to our original index. Just, y’know, backwards.

|

1 |

CREATE UNIQUE NONCLUSTERED INDEX ix_helper2 ON dbo.Posts (CommunityOwnedDate, PostTypeId, LastActivityDate, Id) INCLUDE (CreationDate, OwnerUserId, Score) |

When we re-run our query, what happens?

Oddball

Let’s pause here for a minute. Stuff like this can seem witchcrafty when it’s glossed over in a blog post.

The index I created is awesome for the Windowing function, and the index that SQL registered as missing was awesome for the where clause.

When I have both indexes, SQL chooses the where-clause-awesome-index because it judges the query will be cheaper to deal with when it can easily seek and filter out rows from the key of the nonclustered index, and then pass only those rows along to the Windowing function.

Now, it can still do this with the Windowing-function-awesome-index, because the where clause columns are included, just not as efficiently as when they’re key columns.

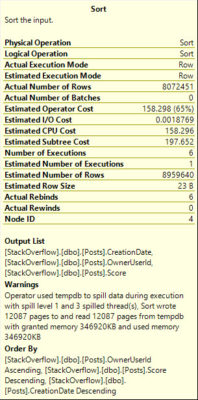

The trade-off here is a Sort operation to partition and order by for the Windowing function, but SQL says that will still be far cheaper to sort a bunch of data

Time bomb

If you’re query tuning with a small amount of data, you’ll take a look at these query costs, stick with the where clause awesome index, and go get extra drunk for doing a wicked good job.

Here they are back to back.

What happens when we include more data?

Going back a year further, to 2015, the costs are close to even. The Sortless plan costs about 159 query bucks, and the Sorted plan costs about 124 query bucks.

Going back to 2013, the Sortless plan now costs 181 query bucks, the Sorted plan costs 243 query bucks, and the Sort spills to disk.

So what’s the point?

Missing index requests don’t always have your long term health in mind when they pop up. Some may; others may just be a shot and a beer to get your query past a hangover.

If I go back and run the ‘2013’ query with only the original index on there (the one that helps the Windowing function), there’s still a missing index request, but with a lower value (75% rather than 98%). Part of this is due to how costs are estimated and where SQL expects the sort to happen (disk vs memory).

In our case, the Sort was a bit of a time bomb. At first, it didn’t matter. As we included more data, it got worse. This is the kind of challenge that a lot of developers face as their app goes from a couple hundred clients to a couple thousand clients, and exactly the kind of thing our Critical Care helps with.

Thanks for reading!

Brent says: this isn’t just about missing index hints in query plans, either: it’s also a great example of why you have to be a little bit careful with the missing index DMV recommendations, too. sp_BlitzIndex would report this index as missing, and you won’t know which queries are asking for it (or whether they’ve gotten better or worse.) Every now and then, you’ll add a missing index and performance will actually get worse – so you’ve also gotta be looking at your top resource-intensive queries via sp_BlitzCache. In this example, after you’ve added Clippy’s index, the now-slower query would show up in sp_BlitzCache with no missing index hints, and you’d need to know how to hand-craft your own.

18 Comments. Leave new

Terrific example of short term gains, long term problems. Out of curiosity, do you think a CTE would help any? Perhaps both indexes could be used, if truly needed…

Perhaps, but it would be a unique set of circumstances that would make a) the maintenance of both indexes for inserts, updates, and deletes, and b) the additional join that would be necessary to bring the correct results together worthwhile.

Not saying never, and it’s totally something I would explore if performance still wasn’t up to snuff. I guess it would also depend a bit how much other queries could use those indexes.

Thanks!

What happens if you put the columns used by the windowing function in the key portion of the index instead of the include? That way they are already sorted. Something like:

CREATE UNIQUE NONCLUSTERED INDEX ix_helper2 ON dbo.Posts (CommunityOwnedDate, PostTypeId, LastActivityDate, OwnerUserId, Score DESC, CreationDate DESC, Id);

There are heavily diminishing returns on key columns in an index after the 3rd or 4th one, especially for ordering elements. What good would ordering two columns at the very end descending do? It’s not like SQL orders each key column specially. If you want SQL to prioritize ordering, you need to put those columns at the beginning. Functionally, there’s not a lot of difference between this and the INCLUDE columns I used.

Great article, great example of why testing is so important. I do have a question, why is the column “id” listed in the index key and it’s not part of the query statement?

It’s the PK/CX on the table, so there’s no penalty for having it listed out. It’s part of the NC index anyway.

Great question though!

+1 Brendan Morgan

It should be the best option. So I’m also interested whether it was considered.

I’d definitely prefer this one over both on unknown to me customers database. But you forgot to put p.Score into INCLUDED list

You should try running sp_BlitzIndex sometime 😉

I’ll play – change the clustered index to LastActivityDate and create a filtered index for

WHERE p.PostTypeId = 1 AND p.CommunityOwnedDate IS NULL

then index on the columns for the windowing function

(p.OwnerUserId , p.Score DESC, p.CreationDate DESC)

Then our query is fast & damn the rest of the workload.

I’ll wait for you to download the SO data dump to try that one 🙂

I don’t quite agree with “Time bomb” part, the first index offers no advantages in real life situation. Going back in time only shows how things evolve when you select bigger part of the table not more data. You are effectively reducing the benefit of the where clause and thus making the index less important.

There are probably no real life situations where you would want to select most or all rows from a table, so optimizing for WHERE is more beneficial than optimizing an OVER clause.

So data never grows, no one writes reporting queries, and no one has a data warehouse. Got it.

Your point is valid only if you select most of the table rows… this is not growth, it will be valid for a table with 10 rows where you select 9 of them, but will fail if you select 1 000 of 1 000 000.

So… when data grows you never end up querying more data? That’s another interesting assertion.

In the previous example my data did grow from 10 rows to 1 000 000 and I did query 1000 instead of 9 rows, didn’t I?

You grew one number by 100,000x and the other number by 111x. I’m not too good at math, but that doesn’t seem quite right to me.

Ar4i – you may wanna take a break and think about the kinds of comments you’re leaving.

“There are probably no real life situations where you would want to select most or all rows from a table”

HAHAHAHAHAHAHAHAHAHA

(draws deep breath)

HAHAHAHAHAHAHAHAHAHA

Oh, Ar4i, you have such sights to see when you get out in the real world, I tell you what. The things we’ve seen…

I do agree there are weird real life situations which are a result of bad DB design. So I take a note to never say never.

Still if you optimize for whole table selects then this would almost always require a different kind of index or none at all.